Here at CloudFlare we are heavy users of the github.com/miekg/dns Go DNS library and we make sure to contribute to its development as much as possible. Therefore when Dmitry Vyukov published go-fuzz and started to uncover tens of bugs in the Go standard library, our task was clear.

Hot Fuzz

Fuzzing is the technique of testing software by continuously feeding it inputs that are automatically mutated. For C/C++, the wildly successful afl-fuzz tool by Michał Zalewski uses instrumented source coverage to judge which mutations pushed the program into new paths, eventually hitting many rarely-tested branches.

go-fuzz applies the same technique to Go programs, instrumenting the source by rewriting it (like godebug does). An interesting difference between afl-fuzz and go-fuzz is that the former normally operates on file inputs to unmodified programs, while the latter asks you to write a Go function and passes inputs to that. The former usually forks a new process for each input, the latter keeps calling the function without restarting often.

There is no strong technical reason for this difference (and indeed afl recently gained the ability to behave like go-fuzz), but it's likely due to the different ecosystems in which they operate: Go programs often expose well-documented, well-behaved APIs which enable the tester to write a good wrapper that doesn't contaminate state across calls. Also, Go programs are often easier to dive into and more predictable, thanks obviously to GC and memory management, but also to the general community repulsion towards unexpected global states and side effects. On the other hand many legacy C code bases are so intractable that the easy and stable file input interface is worth the performance tradeoff.

Back to our DNS library. RRDNS, our in-house DNS server, uses github.com/miekgs/dns for all its parsing needs, and it has proved to be up to the task. However, it's a bit fragile on the edge cases and has a track record of panicking on malformed packets. Thankfully, this is Go, not BIND C, and we can afford to recover() panics without worrying about ending up with insane memory states. Here's what we are doing

func ParseDNSPacketSafely(buf []byte, msg *old.Msg) (err error) {

defer func() {

panicked := recover()

if panicked != nil {

err = errors.New("ParseError")

}

}()

err = msg.Unpack(buf)

return

}

We saw an opportunity to make the library more robust so we wrote this initial simple fuzzing function:

func Fuzz(rawMsg []byte) int {

msg := &dns.Msg{}

if unpackErr := msg.Unpack(rawMsg); unpackErr != nil {

return 0

}

if _, packErr = msg.Pack(); packErr != nil {

println("failed to pack back a message")

spew.Dump(msg)

panic(packErr)

}

return 1

}

To create a corpus of initial inputs we took our stress and regression test suites and used github.com/miekg/pcap to write a file per packet.

package main

import (

"crypto/rand"

"encoding/hex"

"log"

"os"

"strconv"

"github.com/miekg/pcap"

)

func fatalIfErr(err error) {

if err != nil {

log.Fatal(err)

}

}

func main() {

handle, err := pcap.OpenOffline(os.Args[1])

fatalIfErr(err)

b := make([]byte, 4)

_, err = rand.Read(b)

fatalIfErr(err)

prefix := hex.EncodeToString(b)

i := 0

for pkt := handle.Next(); pkt != nil; pkt = handle.Next() {

pkt.Decode()

f, err := os.Create("p_" + prefix + "_" + strconv.Itoa(i))

fatalIfErr(err)

_, err = f.Write(pkt.Payload)

fatalIfErr(err)

fatalIfErr(f.Close())

i++

}

}

![]() CC BY 2.0 image by JD Hancock

CC BY 2.0 image by JD Hancock

We then compiled our Fuzz function with go-fuzz, and launched the fuzzer on a lab server. The first thing go-fuzz does is minimize the corpus by throwing away packets that trigger the same code paths, then it starts mutating the inputs and passing them to Fuzz() in a loop. The mutations that don't fail (return 1) and expand code coverage are kept and iterated over. When the program panics, a small report (input and output) is saved and the program restarted. If you want to learn more about go-fuzz watch the author's GopherCon talk or read the README.

Crashes, mostly "index out of bounds", started to surface. go-fuzz becomes pretty slow and ineffective when the program crashes often, so while the CPUs burned I started fixing the bugs.

In some cases I just decided to change some parser patterns, for example reslicing and using len() instead of keeping offsets. However these can be potentially disrupting changes—I'm far from perfect—so I adapted the Fuzz function to keep an eye on the differences between the old and new, fixed parser, and crash if the new parser started refusing good packets or changed its behavior:

func Fuzz(rawMsg []byte) int {

var (

msg, msgOld = &dns.Msg{}, &old.Msg{}

buf, bufOld = make([]byte, 100000), make([]byte, 100000)

res, resOld []byte

unpackErr, unpackErrOld error

packErr, packErrOld error

)

unpackErr = msg.Unpack(rawMsg)

unpackErrOld = ParseDNSPacketSafely(rawMsg, msgOld)

if unpackErr != nil && unpackErrOld != nil {

return 0

}

if unpackErr != nil && unpackErr.Error() == "dns: out of order NSEC block" {

// 97b0a31 - rewrite NSEC bitmap [un]packing to account for out-of-order

return 0

}

if unpackErr != nil && unpackErr.Error() == "dns: bad rdlength" {

// 3157620 - unpackStructValue: drop rdlen, reslice msg instead

return 0

}

if unpackErr != nil && unpackErr.Error() == "dns: bad address family" {

// f37c7ea - Reject a bad EDNS0_SUBNET family on unpack (not only on pack)

return 0

}

if unpackErr != nil && unpackErr.Error() == "dns: bad netmask" {

// 6d5de0a - EDNS0_SUBNET: refactor netmask handling

return 0

}

if unpackErr != nil && unpackErrOld == nil {

println("new code fails to unpack valid packets")

panic(unpackErr)

}

res, packErr = msg.PackBuffer(buf)

if packErr != nil {

println("failed to pack back a message")

spew.Dump(msg)

panic(packErr)

}

if unpackErrOld == nil {

resOld, packErrOld = msgOld.PackBuffer(bufOld)

if packErrOld == nil && !bytes.Equal(res, resOld) {

println("new code changed behavior of valid packets:")

println()

println(hex.Dump(res))

println(hex.Dump(resOld))

os.Exit(1)

}

}

return 1

}

I was pretty happy about the robustness gain, but since we used the ParseDNSPacketSafely wrapper in RRDNS I didn't expect to find security vulnerabilities. I was wrong!

DNS names are made of labels, usually shown separated by dots. In a space saving effort, labels can be replaced by pointers to other names, so that if we know we encoded example.com at offset 15, www.example.com can be packed as www. + PTR(15). What we found is a bug in handling of pointers to empty names: when encountering the end of a name (0x00), if no label were read, "." (the empty name) was returned as a special case. Problem is that this special case was unaware of pointers, and it would instruct the parser to resume reading from the end of the pointed-to empty name instead of the end of the original name.

For example if the parser encountered at offset 60 a pointer to offset 15, and msg[15] == 0x00, parsing would then resume from offset 16 instead of 61, causing a infinite loop. This is a potential Denial of Service vulnerability.

A) Parse up to position 60, where a DNS name is found

| ... | 15 | 16 | 17 | ... | 58 | 59 | 60 | 61 |

| ... | 0x00 | | | ... | | | ->15 | |

------------------------------------------------->

B) Follow the pointer to position 15

| ... | 15 | 16 | 17 | ... | 58 | 59 | 60 | 61 |

| ... | 0x00 | | | ... | | | ->15 | |

^ |

------------------------------------------

C) Return a empty name ".", special case triggers

D) Erroneously resume from position 16 instead of 61

| ... | 15 | 16 | 17 | ... | 58 | 59 | 60 | 61 |

| ... | 0x00 | | | ... | | | ->15 | |

-------------------------------->

E) Rinse and repeat

We sent the fixes privately to the library maintainer while we patched our servers and we opened a PR once done. (Two bugs were independently found and fixed by Miek while we released our RRDNS updates, as it happens.)

Not just crashes and hangs

Thanks to its flexible fuzzing API, go-fuzz lends itself nicely not only to the mere search of crashing inputs, but can be used to explore all scenarios where edge cases are troublesome.

Useful applications range from checking output sanity by adding crashing assertions to your Fuzz() function, to comparing the two ends of a unpack-pack chain and even comparing the behavior of two different versions or implementations of the same functionality.

For example, while preparing our DNSSEC engine for launch, I faced a weird bug that would happen only on production or under stress tests: NSEC records that were supposed to only have a couple bits set in their types bitmap would sometimes look like this

deleg.filippo.io. IN NSEC 3600 \000.deleg.filippo.io. NS WKS HINFO TXT AAAA LOC SRV CERT SSHFP RRSIG NSEC TLSA HIP TYPE60 TYPE61 SPF

The catch was that our "pack and send" code pools []byte buffers to reduce GC and allocation churn, so buffers passed to dns.msg.PackBuffer(buf []byte) can be "dirty" from previous uses.

var bufpool = sync.Pool{

New: func() interface{} {

return make([]byte, 0, 2048)

},

}

[...]

data := bufpool.Get().([]byte)

defer bufpool.Put(data)

if data, err = r.Response.PackBuffer(data); err != nil {

However, buf not being an array of zeroes was not handled by some github.com/miekgs/dns packers, including the NSEC rdata one, that would just OR present bits, without clearing ones that are supposed to be absent.

case `dns:"nsec"`:

lastwindow := uint16(0)

length := uint16(0)

for j := 0; j < val.Field(i).Len(); j++ {

t := uint16((fv.Index(j).Uint()))

window := uint16(t / 256)

if lastwindow != window {

off += int(length) + 3

}

length = (t - window*256) / 8

bit := t - (window * 256) - (length * 8)

msg[off] = byte(window) // window #

msg[off+1] = byte(length + 1) // octets length

// Setting the bit value for the type in the right octet

---> msg[off+2+int(length)] |= byte(1 << (7 - bit))

lastwindow = window

}

off += 2 + int(length)

off++

}

The fix was clear and easy: we benchmarked a few different ways to zero a buffer and updated the code like this

// zeroBuf is a big buffer of zero bytes, used to zero out the buffers passed

// to PackBuffer.

var zeroBuf = make([]byte, 65535)

var bufpool = sync.Pool{

New: func() interface{} {

return make([]byte, 0, 2048)

},

}

[...]

data := bufpool.Get().([]byte)

defer bufpool.Put(data)

copy(data[0:cap(data)], zeroBuf)

if data, err = r.Response.PackBuffer(data); err != nil {

Note: a recent optimization turns zeroing range loops into memclr calls, so once 1.5 lands that will be much faster than copy().

But this was a boring fix! Wouldn't it be nicer if we could trust our library to work with any buffer we pass it? Luckily, this is exactly what coverage based fuzzing is good for: making sure all code paths behave in a certain way.

What I did then is write a Fuzz() function that would first parse a message, and then pack it to two different buffers: one filled with zeroes and one filled with 0xff. Any differences between the two results would signal cases where the underlying buffer is leaking into the output.

func Fuzz(rawMsg []byte) int {

var (

msg = &dns.Msg{}

buf, bufOne = make([]byte, 100000), make([]byte, 100000)

res, resOne []byte

unpackErr, packErr error

)

if unpackErr = msg.Unpack(rawMsg); unpackErr != nil {

return 0

}

if res, packErr = msg.PackBuffer(buf); packErr != nil {

return 0

}

for i := range res {

bufOne[i] = 1

}

resOne, packErr = msg.PackBuffer(bufOne)

if packErr != nil {

println("Pack failed only with a filled buffer")

panic(packErr)

}

if !bytes.Equal(res, resOne) {

println("buffer bits leaked into the packed message")

println(hex.Dump(res))

println(hex.Dump(resOne))

os.Exit(1)

}

return 1

}

I wish here, too, I could show a PR fixing all the bugs, but go-fuzz did its job even too well and we are still triaging and fixing what it finds.

Anyway, once the fixes are done and go-fuzz falls silent, we will be free to drop the buffer zeroing step without worry, with no need to audit the whole codebase!

Do you fancy fuzzing the libraries that serve 43 billion queries per day? We are hiring in London, San Francisco and Singapore!

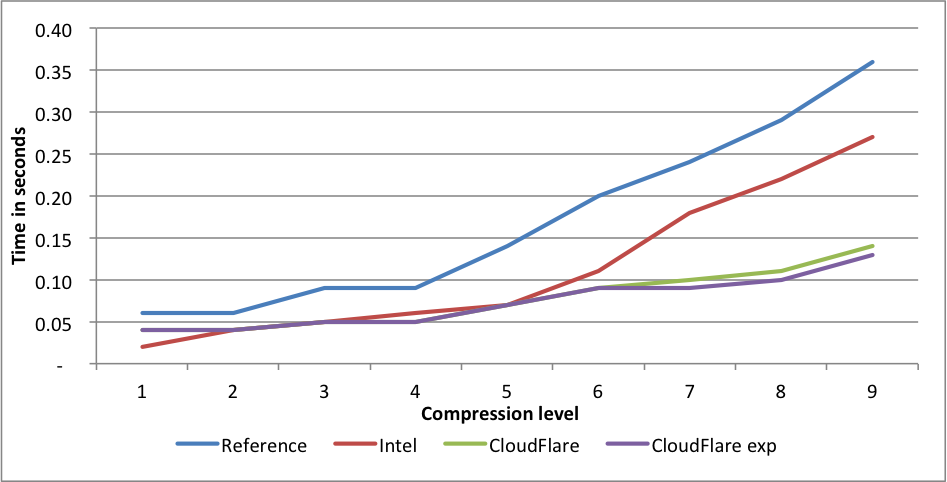

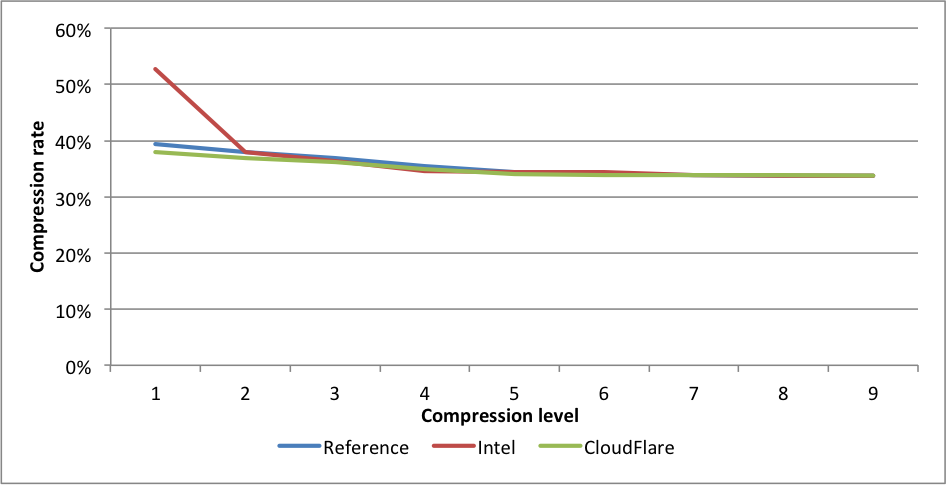

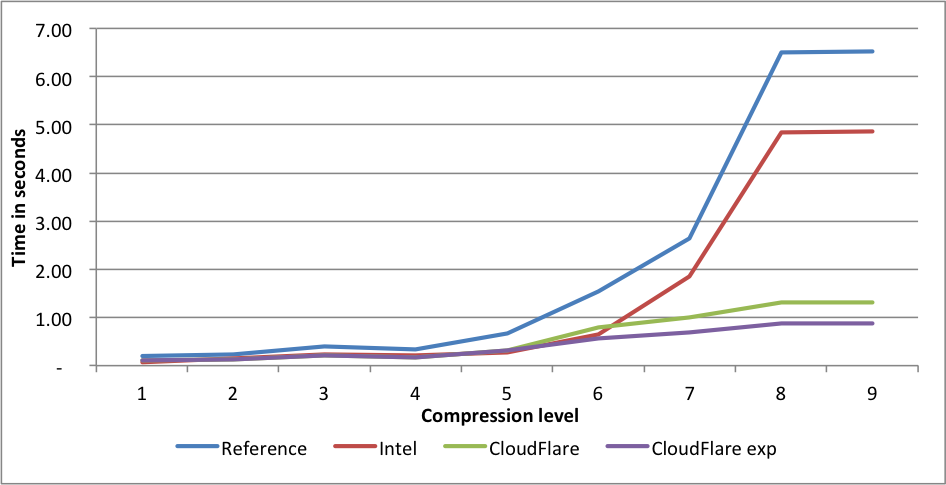

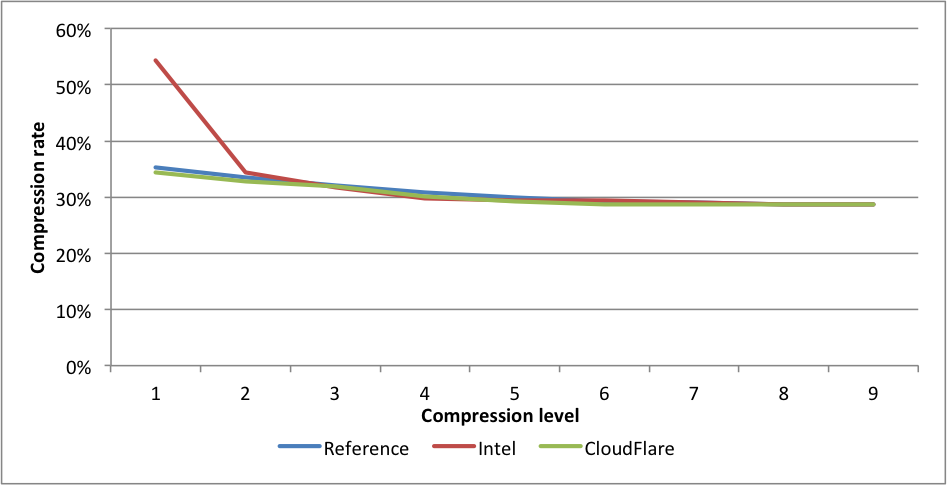

Compression rates:

Compression rates:  For this benchmark, Intel only outperforms our implementation for level 1, but at the cost of 1.39X larger files. This difference is far greater than even the difference between levels 1 and 9, and should probably be regarded as a different compression level. CloudFlare is faster on all other levels, and outperforms significantly for levels 6 to 9. The experimental implementation is even faster for those levels.

For this benchmark, Intel only outperforms our implementation for level 1, but at the cost of 1.39X larger files. This difference is far greater than even the difference between levels 1 and 9, and should probably be regarded as a different compression level. CloudFlare is faster on all other levels, and outperforms significantly for levels 6 to 9. The experimental implementation is even faster for those levels. Compression rates:

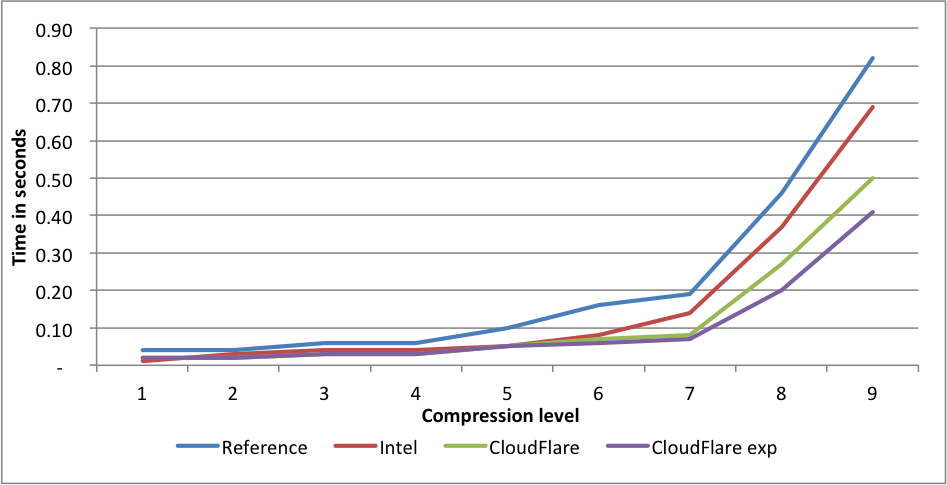

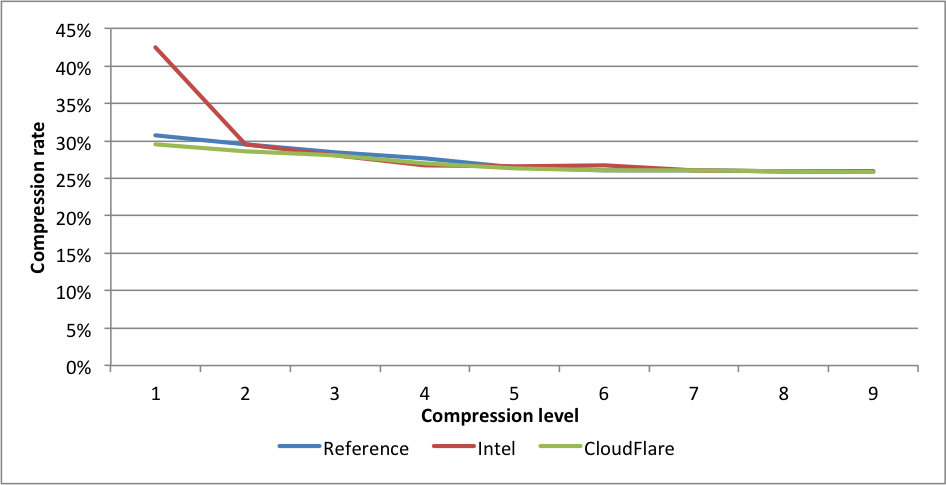

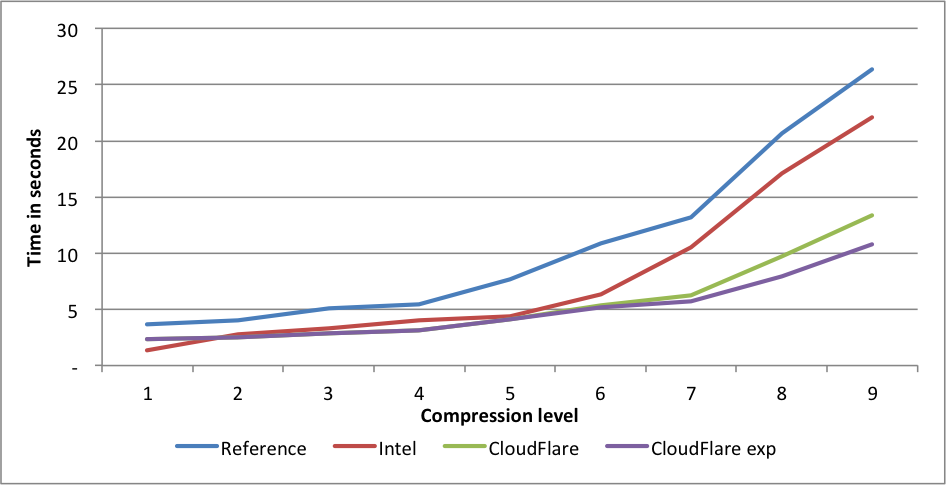

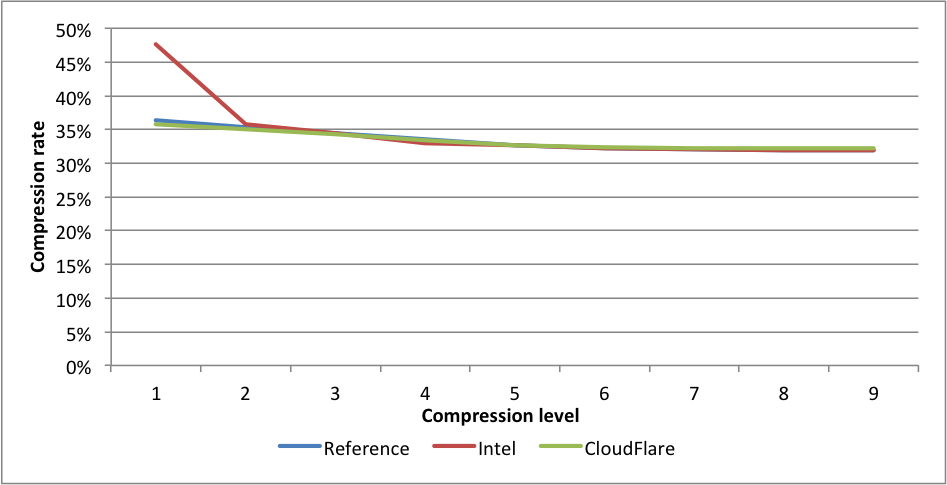

Compression rates:  Here we see a similar situation. Intel at level 1 gets 1.44X larger files. CloudFlare is faster for levels 2 to 9. On level 9, the experimental branch outperforms the reference implementation by 2X.

Here we see a similar situation. Intel at level 1 gets 1.44X larger files. CloudFlare is faster for levels 2 to 9. On level 9, the experimental branch outperforms the reference implementation by 2X. Compression rates:

Compression rates:  This time Intel is slightly faster for levels 5 and 6 than the CloudFlare implementation. The experimental CloudFlare implementation is faster still on level 6. The compression rate for Intel level 1 is 1.58 lower than CloudFlare. On level 9, the experimental fork is 7.5X(!) faster than reference.

This time Intel is slightly faster for levels 5 and 6 than the CloudFlare implementation. The experimental CloudFlare implementation is faster still on level 6. The compression rate for Intel level 1 is 1.58 lower than CloudFlare. On level 9, the experimental fork is 7.5X(!) faster than reference. Compression rates:

Compression rates:  Here again, CloudFlare is the fastest on levels 2 to 9. On level 9 the difference in speed between the experimental fork and the reference fork is 2.44X.

Here again, CloudFlare is the fastest on levels 2 to 9. On level 9 the difference in speed between the experimental fork and the reference fork is 2.44X.

"

"